Hi Ho Hi Ho – It’s off to work I go

A Day In the Life of a DBA

Digging around like a Manic Miner is something that I like to do. I’m a Database Administrator for Claremont and one of the tech guys who evolve their skills in order to provide the best service to Claremont’s clients.

Here’s a particular challenge relating to Oracle’s E-Business Suite’s OLPT database – and more specifically, the task of understanding the impact of archiving on a system. Time to put the pickaxe to good use and go mining.

When working on an extremely large multi-terrabyte OLTP E-Business Suite database, it can sometimes be taken for granted that the amount of archive log files produced is always going to be high. However, on one particularly busy day I was surprised to see that the amount produced had gone up from the usual 40-50Gb to 90-100Gb. Was the system just getting busy? We know that user volumes had gone up, as had their transaction load, but this just seemed too high.

An Investigation

So if you’re interested in digging around like a Manic Miner, this is where to start:

Configure utl file dir to include a directory that will store a ‘Log Miner’ dictionary file:

UTL_FILE_DIR=/oradata/archivelog

Create the dictionary file. Note that the file created is not insignificant in size.

BEGIN

DBMS_LOGMNR_D.build (

dictionary_filename => ‘logminerdict.ora’,

dictionary_location => ‘/oradata/archivelog’);

END;

/

Add log files for analysis:

BEGIN

DBMS_LOGMNR.add_logfile (

options => DBMS_LOGMNR.new,

logfilename => ‘/oradata/archivelog/o1_mf_1_400300_ftfg1657_.arc’);

DBMS_LOGMNR.add_logfile (

options => DBMS_LOGMNR.addfile,

logfilename => ‘/oradata/archivelog/o1_mf_1_400301_ftfg3r12_.arc’);

… etc …

END;

/

Log Miner

Note that ‘Log Miner’ is smart enough to allow you to take archive logs from one database and examine them on another, removing any concerns about putting any unnecessary load on your production system. So, the above steps could be carried out on another server.

Once you’ve added the archive logs, use one of the methods below to begin their analysis:

— Start using all logs

BEGIN

DBMS_LOGMNR.start_logmnr (

dictfilename => ‘/oradata/archivelog/logminerdict.ora’);

END;

/

— Specify a time range

BEGIN

DBMS_LOGMNR.start_logmnr (

dictfilename => ‘C:\Oracle\Oradata\TSH1\Archive\TSH1\dict.ora’,

starttime => TO_DATE(’01-JAN-2001 00:00:00′, ‘DD-MON-YYYY HH:MI:SS’),

endtime => TO_DATE(’01-JAN-2001 10:00:00′, ‘DD-MON-YYYY HH:MI:SS’));

END;

/

— Specifiy SCN range

BEGIN

DBMS_LOGMNR.start_logmnr (

dictfilename => ‘C:\Oracle\Oradata\TSH1\Archive\TSH1\dict.ora’,

startscn => 100,

endscn => 150);

END;

/

Finally, produce the magic result that tells you what used all those segments in the archive logs:

SELECT seg_owner, seg_name, count(*) AS Hits

FROM v$logmnr_contents

WHERE seg_name NOT LIKE ‘%$’

GROUP BY seg_owner, seg_name

order by 3 asc;

Identifying the faults

In this case we were able to identify faults in:

- Excessive log messages being produced from the SOA EBS Database Adaptor which by default is enabled with a log level of ‘Event’, instead of the more common values of ‘Error’ or ‘Unexpected.

- A change in the EBS Price Modifier resulted in 99% of price calls producing an error also in FND_LOG_MESSAGES.

- Over 2000 emails stuck in an Oracle Email pre-processing table continually being re-processed every 5 minutes.

Various fixes and configuration changes resulted, and the “Purge Alerts and Logs” request which previously had 4 million records to purge daily, now has little or no work, resulting in a large reduction in deleted data blocks heading for the redo logs. The biggest win was the stuck emails, needlessly reprocessing in Email Center which resulted in a new bug raised with Oracle.

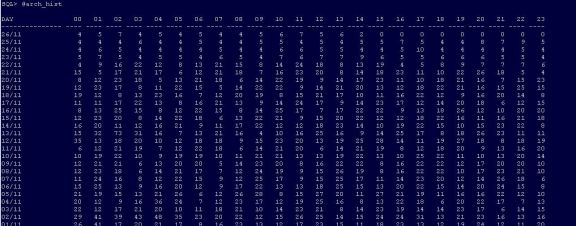

What impact did it have? Astonishingly the resulting fix reduced our redo volumes by 75%! The image below shows the number of archive log files produced per day, per hour timeslot. The fixes were applied and the number of files drops very significantly from then onwards.

All these bugs had gone unnoticed and produced unnecessary load on the system and the backups. The resulting fix was so significant I had to double-check the database was still in archive log mode to believe it! End result, one happy customer with emails processing faster than ever, and SOA transactions going more smoothly. Not to mention one happy DBA team managing the backups!